Rad Edge

Sandia is developing analog computing accelerators to enable the deployment of trusted artificial intelligence (AI) at the point-of-sensing or at the edge in our nation’s mobile, airborne and satellite systems. We will co-design intelligent algorithms with tailored analog in-memory computing to achieve 100X more compute (performance/watt) than possible with state-of-the-art technology in SWaP-constrained radiation environments. The entire information processing chain from materials and devices to systems, architectures and algorithms will be co-designed to leverage fundamental breakthroughs.

Whetstone

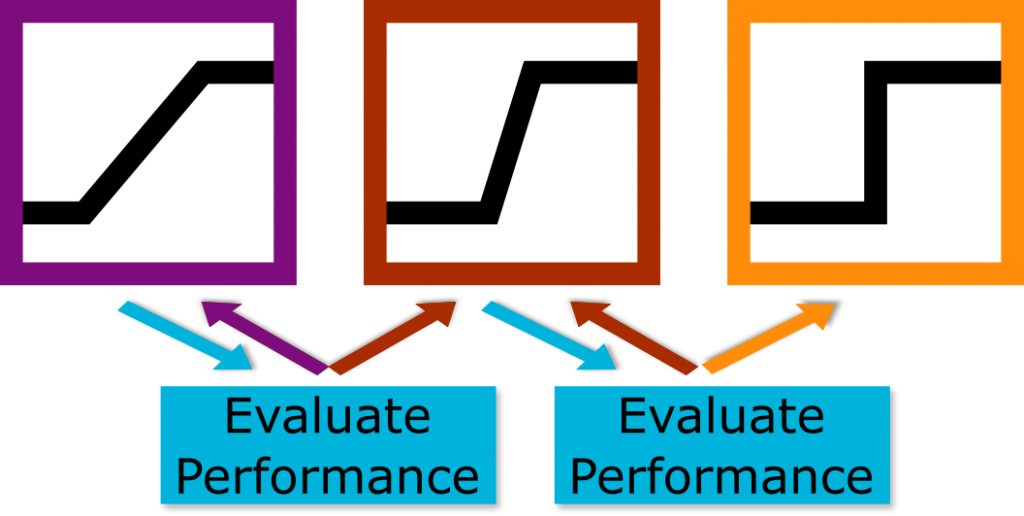

Deep neural networks have revolutionized machine learning, but at great computational cost. Neuromorphic hardware may make deep learning more energy efficient. However, neuromorphic computers use “all-or-nothing” activation functions, which prevent traditional backpropagation by creating zero gradients. To train neuromorphic deep neural networks, we developed Whetstone, which progressively “sharpens” the activation function during training so that the trained networks can be deployed on neuromorphic hardware.
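
The sharpening idea can be illustrated with a minimal sketch. It assumes a bounded ramp activation on [0, 1] whose slope steepens until it becomes a binary step at 0.5. The names `sharpened_activation`, `ramp_slope`, and the `sharpness` parameter are hypothetical and chosen for illustration; this is not the Whetstone library's API or its exact schedule.

```python
def sharpened_activation(x, sharpness):
    """Ramp activation that approaches a binary step as sharpness goes to 1.

    sharpness = 0.0 gives a ramp clipped to [0, 1] (slope 1).
    sharpness = 1.0 gives an all-or-nothing step at x = 0.5.
    (Illustrative assumption, not the Whetstone API.)
    """
    if not 0.0 <= sharpness <= 1.0:
        raise ValueError("sharpness must lie in [0, 1]")
    if sharpness == 1.0:
        return 1.0 if x >= 0.5 else 0.0
    half_width = 0.5 * (1.0 - sharpness)   # ramp shrinks around x = 0.5
    ramp_start = 0.5 - half_width
    y = (x - ramp_start) / (2.0 * half_width)
    return min(1.0, max(0.0, y))           # clip to [0, 1]


def ramp_slope(sharpness):
    """Gradient inside the ramp; it is zero everywhere outside the ramp."""
    if sharpness == 1.0:
        return float("inf")                # step function: no usable gradient
    return 1.0 / (1.0 - sharpness)


if __name__ == "__main__":
    # Hypothetical schedule: sharpen a little more after each training epoch.
    for epoch in range(5):
        s = epoch / 4
        outputs = [sharpened_activation(x, s) for x in (0.2, 0.4, 0.6, 0.8)]
        print(f"sharpness={s:.2f} slope={ramp_slope(s)} outputs={outputs}")
```

While `sharpness` is below 1, inputs inside the ramp still have a finite, nonzero gradient, so gradient-based training can proceed. Only the fully sharpened network uses binary outputs.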

View Whetstone code on GitHub!

W.M. Severa, C.M. Vineyard, R. Dellana, S.J. Verzi, J.B. Aimone, Training deep neural networks for binary communication with the Whetstone method, Nature Machine Intelligence, Vol. 1, Issue 2, pp. 86-94, Feb. 2019.

C.M. Vineyard, R. Dellana, J.B. Aimone, F. Rothganger, W.M. Severa, Low-Power Deep Learning Inference using the SpiNNaker Neuromorphic Platform. In Neuro-Inspired Computational Elements (NICE), Albany NY, 2019, pp. 1-7.

M. Parsa, C.D. Schuman, P. Date, D.C. Rose, B. Kay, J.P. Mitchell, S.R. Young, R. Dellana, W.M. Severa, T.E. Potok, K. Roy, Hyperparameter Optimization in Binary Communication Networks for Neuromorphic Deployment, in International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 2020, pp. 1-9.

ARNIE: Autonomous Reconfigurable Neural Intelligence at the Edge

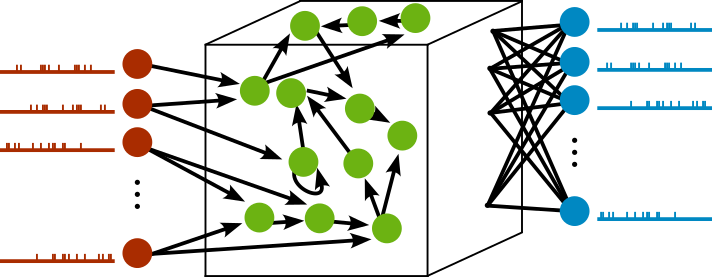

Many national security applications require systems that operate under severe size, weight, and power constraints, which limits the use of more traditional artificial deep neural networks. As an alternative, we quantify the capabilities of bio-inspired reservoir architectures. Our networks consist of recurrently connected, spiking, excitatory and inhibitory units, often referred to in the literature as liquid state machines (LSMs). These networks process time-varying data from multiple input channels with relatively few neurons. In addition, they do not need to be trained with traditional backpropagation-of-error algorithms. Instead, an output layer can be trained to interpret the activity of the reservoir. The spiking paradigm enables us to take advantage of low-power neuromorphic hardware.
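
The reservoir approach can be sketched as follows. This is a minimal illustration, not the ARNIE implementation. It assumes leaky integrate-and-fire units, a fixed random recurrent network with a fraction of inhibitory units, and a linear readout trained with a normalized delta rule. All function names, parameters, and constants are hypothetical.

```python
import random


def make_reservoir(n_inputs, n_neurons, frac_inhibitory=0.2, p_connect=0.1, seed=0):
    """Build fixed random input and recurrent weights (never trained)."""
    rng = random.Random(seed)
    n_inh = int(frac_inhibitory * n_neurons)
    # Outgoing weights of the first n_inh units are negative (inhibitory).
    sign = [-1.0 if j < n_inh else 1.0 for j in range(n_neurons)]
    w_rec = [[sign[j] * rng.uniform(0.0, 0.5)
              if i != j and rng.random() < p_connect else 0.0
              for j in range(n_neurons)] for i in range(n_neurons)]
    w_in = [[rng.uniform(0.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_neurons)]
    return w_in, w_rec


def run_reservoir(w_in, w_rec, inputs, leak=0.9, threshold=1.0):
    """Drive the reservoir with a multi-channel series; return spike counts."""
    n = len(w_rec)
    v = [0.0] * n          # membrane potentials
    spikes = [0] * n       # spikes emitted on the previous step
    counts = [0] * n
    for x_t in inputs:
        new_v = []
        for i in range(n):
            drive = sum(w * x for w, x in zip(w_in[i], x_t))
            drive += sum(w_rec[i][j] for j in range(n) if spikes[j])
            new_v.append(leak * v[i] + drive)
        spikes = [1 if u >= threshold else 0 for u in new_v]
        v = [0.0 if s else u for u, s in zip(new_v, spikes)]  # reset on spike
        counts = [c + s for c, s in zip(counts, spikes)]
    return counts


def predict(w, b, state):
    return b + sum(wi * si for wi, si in zip(w, state))


def train_readout(states, targets, epochs=200, lr=0.5):
    """Train only the linear output layer with a normalized delta rule."""
    w, b = [0.0] * len(states[0]), 0.0
    for _ in range(epochs):
        for s, t in zip(states, targets):
            step = lr * (t - predict(w, b, s)) / (1.0 + sum(si * si for si in s))
            w = [wi + step * si for wi, si in zip(w, s)]
            b += step
    return w, b


if __name__ == "__main__":
    steps = 50
    w_in, w_rec = make_reservoir(n_inputs=2, n_neurons=30)
    # Two toy input patterns: activity on channel 0 versus channel 1.
    patterns = [[[1.0, 0.0]] * steps, [[0.0, 1.0]] * steps]
    states = [[c / steps for c in run_reservoir(w_in, w_rec, p)] for p in patterns]
    w, b = train_readout(states, targets=[0.0, 1.0])
    print([round(predict(w, b, s), 3) for s in states])
```

Only `train_readout` adjusts any weights. The recurrent weights stay fixed, so no error signal has to be propagated back through the spiking units.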

Local learning rules for dynamic, fault tolerant learning

Currently, the predominant method for training deep neural networks is the backpropagation of error algorithm (BP). While deep neural networks (DNNs) trained via extended versions of the original BP algorithm are now surpassing human performance on many specific tasks, major barriers remain for usage by DOE missions. BP requires enormous time, energy, and labeled data resources, making real-world problems computationally expensive or infeasible. Furthermore, the training algorithm results in networks with performance limitations. These networks are rigid and can fail in unpredictable and catastrophic ways. They need to be retrained when the statistics of the input change and often do not perform well on imbalanced data sets. National security applications require artificial neural networks (ANNs) that consume less power, are fast and dynamic learners, are fault tolerant, and can learn from unlabeled and imbalanced data. Here we explore the use of brain-inspired, local learning rules as an alternative to, and in tandem with, traditional gradient descent algorithms.
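
To make "local" concrete, the sketch below uses Oja's rule, a classic Hebbian-style update. It is chosen here only as an illustration; the text above does not say which rules the project uses. Each weight changes using only its own input, its own value, and the unit's output, with no labels and no backpropagated error. The function name and learning rate are hypothetical.

```python
def oja_update(w, x, lr=0.1):
    """One step of Oja's rule: dw_i = lr * y * (x_i - y * w_i).

    y is the unit's output for input x. The update for w_i needs only
    x_i, w_i, and y, so it is local to the synapse and unsupervised.
    """
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]


if __name__ == "__main__":
    # Toy unlabeled data: samples lie along the direction (0.6, 0.8),
    # with alternating sign.
    w = [1.0, 0.0]
    for k in range(500):
        c = 1.0 if k % 2 == 0 else -1.0
        w = oja_update(w, [0.6 * c, 0.8 * c])
    print([round(wi, 4) for wi in w])
```

On this toy data the weight vector aligns with the dominant input direction while its norm stays bounded.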

Applying Neuroscience to AI: Perspective & Review Papers

J.B. Aimone, P. Date, G.A. Fonseca-Guerra, K.E. Hamilton, K. Henke, B. Kay, G.T. Kenyon, S.R. Kulkarni, S.M. Mniszewski, M. Parsa, S.R. Risbud, C.D. Schuman, W.M. Severa, J.D. Smith, A review of non-cognitive applications for neuromorphic computing, Neuromorphic Computing and Engineering (IOP Publishing), Vol. 2, Issue 3, Sept. 2022.

J.B. Aimone, Neural Algorithms and Computing Beyond Moore’s Law, Communications of the ACM, Vol. 62, Issue 4, pp. 110-119, Apr. 2019.

J.L. Krichmar, W.M. Severa, M.S. Khan, J.L. Olds, Making BREAD: Biomimetic Strategies for Artificial Intelligence Now and in the Future, Frontiers in Neuroscience, Vol. 13, June 2019.

F.S. Chance, J.B. Aimone, S.S. Musuvathy, M.R. Smith, C.M. Vineyard, F. Wang, Crossing the Cleft: Communication Challenges Between Neuroscience and Artificial Intelligence, Frontiers in Computational Neuroscience, Vol. 13, June 2019.

J.B. Aimone, A Roadmap for Reaching the Potential of Brain-Derived Computing, Advanced Intelligent Systems, Vol. 3, Issue 1, Nov. 2020.